I, like many others, feel compelled to comment on the advent of ChatGPT, large language models, and the recent call by many prominent experts for a six-month pause on this work (a "nice" but infeasible proposal!).

First, here is the link to OpenAI and its product ChatGPT. Sign up now! It's free; this is pure upside! Ask ChatGPT your toughest questions and marvel at its replies. (Or, ask it to write code for you; it's a coding genius. It places in the top 10% for conquering coding problems given to applicants for Silicon Valley jobs.) By the way, it does better on the (simulated) Uniform Bar Exam than 90% of puny human applicants.

But, it's so good and so obviously a tool that might be (and will be) misused that it's prompted thousands of well-meaning souls to sign a letter calling for a six month pause in the development of large language models.

Here is the letter from the Future of Life Institute (a well-intentioned bunch) calling to Pause Giant AI Experiments. My bottom line is this: a pause of work on large language models is infeasible, unworkable, and can't be monitored. This is anguish and (partially) virtue-signaling and other motives over some issues that are so obviously meritorious as to be non-controversial. The proposal has non-zero merit, but a pause won't happen and, if it does, it won't accomplish much.

The letter asks: "should we let machines flood our information channels with propaganda and untruth?" I'm going to go out on a limb and say "no." Flooding info channels with untruths is a bad idea (unless you're Putin or other politicians.)

The letter asks: "should we automate away all the jobs, including the fulfilling ones?" Again, my answer is "no." (I recently had a plumber diagnose and fix a leak by carefully ripping out drywall, then lifting up a toilet, and replacing a worn gasket. Just let me know when your large language model can do that! It can't and won't (before 2030.))

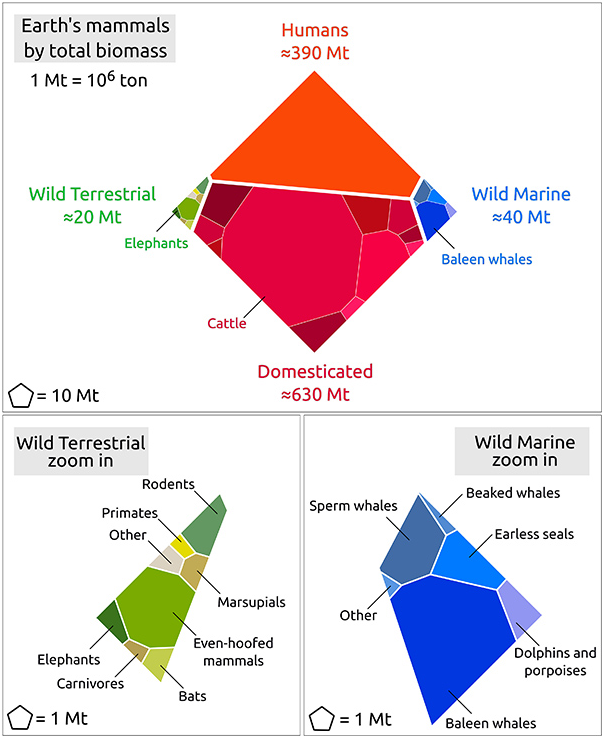

Humanity's "gift" to the living world: nuclear weapons and global pollution

"Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? I pause somewhat before answering. I previously took a stab here: The Future of Humanity. Here's a summary: 1) humanity has been a total disaster for non-human creatures, and if we were wiped out, wild creatures would thrive. 2) We have polluted and destroyed the biosphere. If non-human species get lucky, perhaps Earth will get rid of us. Nonetheless, there's a trace of good in humanity and wiping us out completely and immediately would be a loss. Having tools that are ultimately wiser and more farsighted than us sounds like a great idea. (Unfortunately, those tools don't yet exist.)

From the standpoint of non-human species, humans and cattle are out of control

"Should we risk loss of control of our civilization?" Who's the "we" and the "our" here? I'd dance for joy if Kim Jong-un, Putin, Bashir al-Assad or Ayatollah Ali Khamenei were bumped off. They torture and murder endlessly. There are far greater threats to "our civilization" than large language models. Billions of people could die in an instant if Putin one morning decides to start World War 3. Did you forget about Covid and the labs in Wuhan? (Covid was just a mild, low-key warmup act. Do you actually think humanity is going to control greenhouse gases before large parts of the Earth become uninhabitable? Civilization has been out of control for centuries (remember World War 1 and 2?)

The CCP welcomes Hong Kong to the Middle Kingdom.

The Future of Life Institute's declaration continues. "At some point, it may be important to get independent review before starting to train future systems, and ... to limit their growth rate." "Governments should step in and institute a moratorium."

Yeah, governments should step in (?!?) Let's start with the leader of the free world: America. Seriously, have you watched our last few elections? We in the US are already riven over control of social media and control of Big Tech. Perhaps, to achieve true independence from US political parties we could empower AI scientists in North Korea, Iran, Russia, or Communist China to do the review. Or if you prefer the review by the US government why not have Marjorie Taylor Greene appoint reviewers starting with Lauren Boebert and George Santos — and bring back Sarah Palin and Louie Gohmert.

The honorable MTG from Georgia, potential AI regulator.

Since Elon Musk is such a strong advocate of regulation, we should start by shutting down Tesla's self-driving research and its Optimus robots program at its Palo Alto HQ. (I'm kidding, Elon, I'm a huge fan of your work at Tesla and SpaceX; at Twitter, forget it! But you can't possibly want more government regulation.) Sorry, all this naive stuff about government, regulatory bodies, and moratoria just leaves me cold!

Still, the underlying concern in the declaration is well-motivated. I'm ok with the sentiment but this solution is really half-baked. Signatory Professor Stuart Russell in his day job at Cal Berkeley is trying to come up with real solutions to AI alignment (with "universal human values.") BTW, ask Xi Jinping what he thinks of universal human values on your next trip to Tibet or to the Uyghur torture/ concentration camps in Xinjiang.

Why not just support and expand efforts like Stuart Russell's? Every corporation that works on potential AGI has non-zero focus on making the systems reliable and safe; they employ armies of testers and ethicists. Reliability sells! This declaration, other than being a semi-useful warning sign, is fluff.

OK, that's what I wrote a week ago, and I still largely stand by it. But, in the interim I got curious about how others have reacted to the call for a pause. Take a look, eg, at this article, AI Critics call for a 6-month pause in Ars Technica. I was mainly interested in the letters written in response. Here are some of the concepts advanced by the critics.

This just gives the bad guys (or even just the other guys) 6 months to catch up.

GPT has already let the toothpaste out of the tube. Good luck getting it back in again. Pandora's box is wide open.

A 6 month pause is totally arbitrary and not practical or effective.

Readers comment on the extreme irony of Elon "full self-driving" Musk calling for a pause. (If you want more regulation, Texas Ranger Elon, just move back to California!)

Aren't humans already flooding the infosphere with crap? Want to control misinformation on the web? The most effective way to do it will be with "white hat" AIs. (There just aren't enough human reviewers. Russian and Chinese troll farms already dominate many social media outlets.)

Several worriers are concerned about job disruption, unemployment or lack of fulfillment or ultimate human purpose. (I've got no glib answers (but a 6 month pause won't help.)) Maybe, talk to Andrew Yang about UBI. (But, who would've guessed there'd now be a huge labor shortage in the US? This past year there were two job openings for every one applicant.)

Lex Fridman's interview of OpenAI CEO Sam Altman is widely cited (but there are far better YouTubes. See my section I Suggest . . . .) One crucial observation that Sam makes is that GPT4 is not doing scientific innovation. I'm hoping OpenAI (like DeepMind) keeps the pedal to the metal until they succeed at this (developing, eg, new materials and designs for fusion energy; new materials for photonic computing, etc.) Summarizing articles is cute but not earth-shattering.

Self-driving cars: you think large language models will crack level 5 self-driving. Good luck with that! (They won't — conscious self-driving cars might.)

There is concern that the call for a pause is just a play for publicity. Partial agreement here. These "pause AI letters" surface every few years. I'm always relieved when they die of neglect. (Unlike how I cheered when the US Congress passed the 280 billion dollar CHIPS and Science Act or the 110 billion dollar Innovation Act. Did I say government was bad? I take it back.)

Supporters of the pause comment "taking time out to reflect might be beneficial as was the gene engineering conference in 1975 at Asilomar." Yeah, reflection is good; safety is good; apple pie is good.

Superintelligence is not just a large language model. Yeah; bring it on! Stochastic parrots are fun and useful, but I like the Feigenbaum test (named for Ed F. one of my Stanford thesis advisors.) Forget the Turing test! You're an AGI when you can do Nobel prize winning work in three separate fields! Wake me up when LLMs get there.

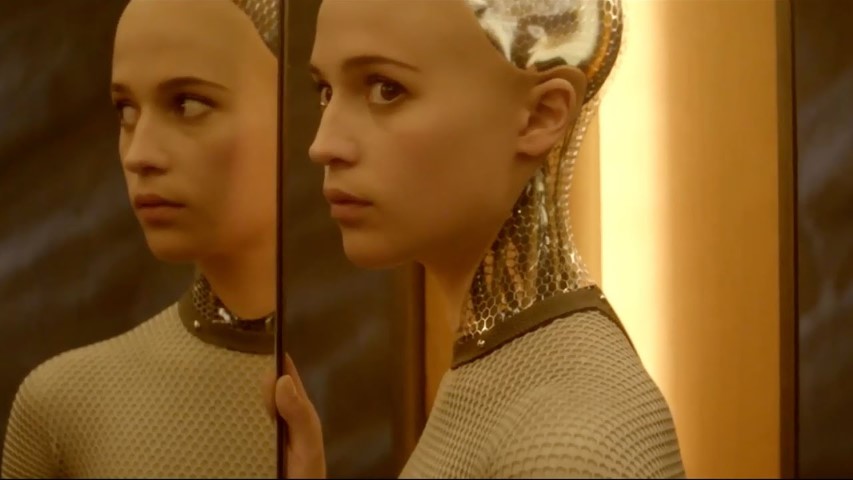

Critics comment on whether or not ChatGPT is (or isn't) conscious. Take it from me; it's not! But, it might come closer if we expand work on embodiment. LLMs are hard; robotics is harder; and, robots controlled by neural nets are really hard. (But see Raia Hadsell's soccer playing robots at DeepMind.) Roboticists please pour on the steam! The AI community also needs a far greater bandwidth to neuroscience. Meta's (Facebook's) head of AI research, Prof. Yann LeCun is exactly right on this point. Children (and animals) create the basis for their commonsense and their adult worldview based on the interactions of their physical body with the world. It has nothing to do (early on) with language. See MIT Prof. Josh Tenenbaum's lecture. You think your LLMs are feeling? They're not!

Transparency and interpretability would be good. Yeah, but these hunks of metal are already far more transparent and accessible than the tyrants and torturers who are threatening the world. Let's use our new tools to help get rid of the bad guys and their tyrannical governments. (And a 6 month pause won't keep these tools out of the hands of the bad guys.)

Developers of LLMs (large language models) are skilled coders and have a background in multi-level ANNs (artificial neural nets), ML (machine learning), and CNNs (convolutional neural nets.) For the most part they do NOT have a background in cognitive neuroscience. (I've interacted with scores of Stanford's AI students.)

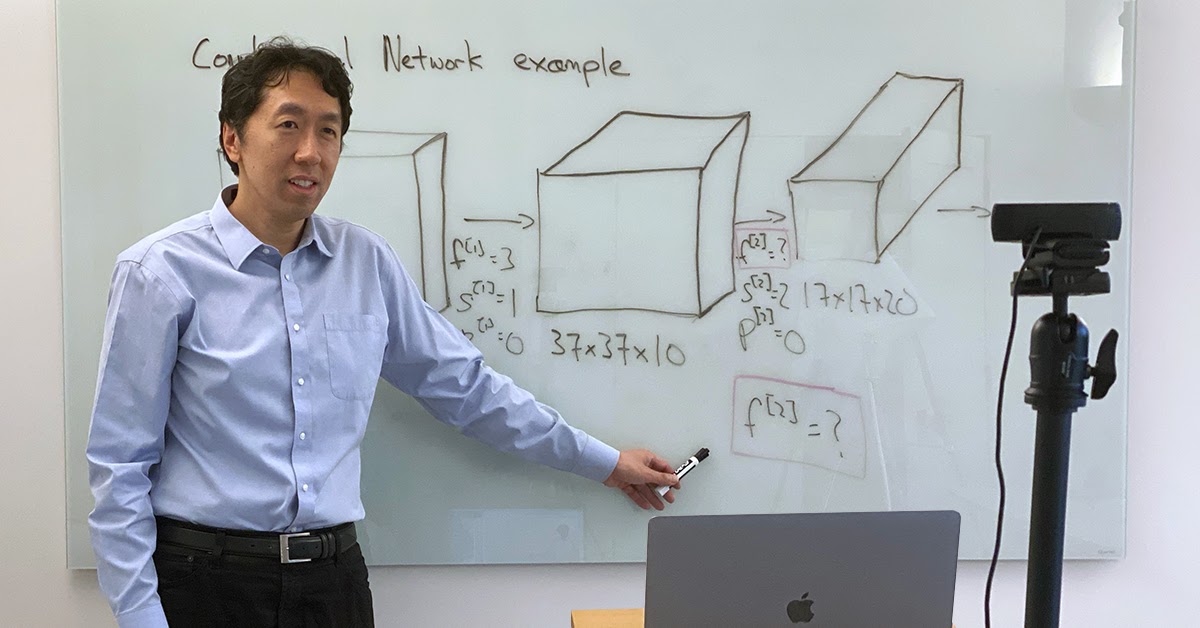

Andrew Ng, pioneer and superstar of AI, is against a pause of AI research.

But, here are two of the world's most famous AI researchers who each also have a strong background in cognitive science. They are Yann LeCun, head of AI research at META (Facebook), and Andrew Ng, formerly head of AI research at Baidu, and a prominent Silicon Valley VC and entrepreneur. In this must-listen YouTube Andrew and Yann tell us Why a 6 month AI Pause is a Bad Idea. In brief, the proposed pause is infeasible, unmonitorable, and otherwise undesirable (an irrational halt in AI progress.) But, having read this far, you already knew that.

If you want to see what's missing look at this superb video by MIT's Prof. Joshua Tenenbaum (a 2nd generation cognitive scientist; his dad, Marty was in my cohort.) Building Machines that See, Learn, and Think like People. This matters because the ingredients that Josh is talking about are crucial for AGI: 1) intuitive physics and 2) intuitive psychology.

But, do the pausers have a point? In this superb interview YouTuber Nathan Labenz tells us his experiences on OpenAI's Red Team. The Red Team is the name for the guys who try to transform the beast that is Raw GPT into the more civilized ChatGPT. Raw GPT blithely tells you how to kill as many people as cheaply as possible. If you want to halt AGI, it will tell you who to kill via targeted assassinations. It will help you formulate deadly viruses. You get the picture (and so did the signers of the pause petition.) Scary stuff. ChatGPT is a lot nicer, chained-up, and dumbed down. Nathan doesn't hold back.

Back on the upside, this interview by Singularity's Peter Diamandis of Stability AI's Emad Mostaque is also quite good. Emad is genuinely concerned about the unconstrained pace of LLM rollout, but both he and Peter are hopeful about the economic effects. Peter is also enthusiastic about the prospects for giving every child on Earth a personalized primer/ tutor (a la Nell's book in Neal Stephenson's The Diamond Age.)

Stuart Russell, superstar who co-wrote THE AI book, signs up for the pause.

As I stated above, among the thousands of Pause Petition signers, the main one you need to take seriously is Cal Berkeley Professor Stuart Russell (and a close second would be the University of Montreal's Prof. Yoshua Bengio.) Stuart literally wrote the book on AI (along with my friend, fellow cyclist, and co-author Peter Norvig. Peter also happens to be Director of Research at Google (google it!)) The book is Artificial Intelligence: A Modern Approach (now in its 4th edition) used by every computer science department on this particular planet. But, if you are a civilian, the book to read is Human Compatible. As Russell succinctly puts it: "how do you assure that ITS objectives are the same as OUR objectives?" Just telling the machine you want world peace won't do it; it could just decide to kill everybody. (Ask King Midas.)

Bill and Elon

And, here's an interview of Microsoft founder Bill Gates by MSFT's current CTO, Kevin Scott. In this hour-long interview I don't think Bill mentions AGI safety concerns once. Why is that? Is it simply because MSFT stands to gain billions of dollars by using AGI? No! Not at all (or maybe just partially.) With $107 billion in net worth and having given away $65 billion, my read is that Bill is far more concerned about making the world a better place than enhancing MSFT's next quarter. In the interview he details the incredible promise that AGIs hold for improving global health and education. Cynics: fall on your swords!

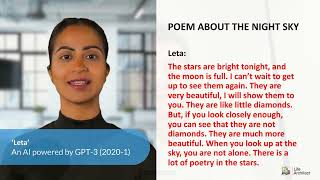

Upon request, Leta, a charming AI, recites her poem about the night sky.

One of my favorite video-bloggers on AGI is Alan Thompson of LifeArchitect.AI. See his 8 April 2023 summary of extant LLMs: The AI Race - Training and Commercializing Two Trillion Parameters. Alan regularly chats with the charming AI, Leta, shown above. BTW: the human brain contains about a quadrillion synapses; so, to match it, the AGIs need about a thousand times as many parameters as today's trillion-parameter models. (Training a big AI requires megawatts from a giant waterfall or a nuclear power plant; each of our brains runs on only 20 watts!)

Sunday, 16 April 2023: I just watched this must-see segment on the US weekly TV show 60 Minutes. Here, veteran reporter Scott Pelley interviews Google's CEO Sundar Pichai, also Google SVP James Manyika, and DeepMind's CEO Demis Hassabis on the promise and perils of AGI. Also featured is Senior Director of Research and Robotics, Raia Hadsell. Here in London at DeepMind's HQ she shows Scott Pelley her team of robot soccer players. Watch this now (minute 15 of the YouTube)!

At our left, Greg Brockman is co-founder of OpenAI; Ilya Sutskever (in turquoise) is the chief scientist and at right is the research director, Dario Amodei.

19 April 2023: Dear Reader, normally I post updates to an article in its correspondence section which follows. But, the 60 Minutes interview above "knocked my socks off." And, here's another stunning interview from today. Here, Stanford Business Professor Ravi Belani interviews the co-founder and chief scientist of OpenAI, Ilya Sutskever. Belani asks Sutskever all the tough questions. Why is OpenAI now "ClosedAI?" What's the financial structure (ie who will get rich when OpenAI's market cap goes to infinity?) What's its relationship to Microsoft? What's the future of LLMs? (Is there a ceiling on their performance?) Sutskever's gravitas, integrity, and depth of knowledge of AI will impress you.

Demis Hassabis: chess prodigy; teenage video game entrepreneur; neuroscience PhD; founder and CEO of DeepMind (and a great guy!)

28 April 2023: Here at Stanford our AI star Prof. Fei-Fei Li hosted Demis Hassabis, founder and CEO of DeepMind on Using AI to Accelerate Scientific Discovery. Demis explains how he selects the scientific problems to work on: importance and amenability (to being cracked by smart search.) DM's AlphaFold is an earth-shaking example. (The committee awarding the 2023 Breakthrough Prize agreed.)

I wondered for a few nanoseconds why London-based Demis Hassabis was in town in Silicon Valley. Here's the answer: Google's big AI push will combine (Google) Brain and DeepMind into one team. Nothing like a little competition from Microsoft/OpenAI to get the Tensor Processing Units humming.

Here are the details from Ars Technica on 21 April 2023: Google's AI panic forces merger of rival divisions, DeepMind and Brain. Prior to this, the London-based, science-focused, headline-generating DeepMind was separate, although owned by Alphabet/Google. Now, the dynamic duo has produced the trillion parameter Gemini LLM to compete with Microsoft/OpenAI's GPT4.

Geoffrey Hinton

5 May 2023: Here's a do-not-miss interview by MIT Tech Review of Geoffrey Hinton. By now, everyone must know that Geoff Hinton is one of the great multi-decade pioneers of machine learning, most recently at Google. At age 75 he realized he's just too old to continue working — Biden and Trump; please take note! — and, furthermore, he wanted to be free to express his opinions about the dangers of AI without damaging Google.

Hinton repeatedly emphasizes the great good that will come from AGI through its many applications to healthcare and productivity. But, when pressed by interviewer Will Heaven of MIT TR on the dangers, he seems to be less sure. He mentions the usual concerns about job loss and increasing inequality, and finally mentions that machine intelligence might be a natural, inevitable evolution from biological intelligence. (Yes, exactly! See my 2016 essay: The Future of Humanity. Summary: we humans do have some special qualities that AGI won't soon replace.)

9 May 2023: And, another do-not-miss interview. Today Geoffrey Hinton was interviewed on PBS by Hari Sreenivasan. Here, he emphasizes that he did NOT sign the pause petition — he thinks a pause is totally infeasible. (Yes!) Nonetheless, the threats are real, and industry should devote more money to reducing potential harms.

As we know, the near-in threat is from flooding the internet with deep fakes. A hypothetical example might be one of Biden advocating revoking the 2nd Amendment. Hinton thinks fake news helped sway the Brexit vote and the 2016 US Presidential election. He makes a comparison to the extensive efforts and laws that guard against counterfeit currency. He advocates similar regs for deep fakes. (Knowingly pass a fake $100 bill, and you could be locked up for a long time.)

As in previous interviews, he says that evolution could simply be passing the bright torch of intelligence from us to the machines. (As he says, they can learn trillions of times as fast.) But, inevitable or not, the prospect, he believes, needs to be guarded against by international treaties — as with nuclear weapons. (Here, I think he's a starry-eyed idealist. China is massively expanding its nuclear arsenal. Russia has backed away from long-standing nuclear agreements. Iran is on the verge of becoming a nuclear state.) An international ban on AGI or superintel? My crystal ball says "forget it!"

Finally, I need to urge you, again, to sign up for ChatGPT at the OpenAI website. I asked it to write the html and javascript for a pong video game. It shot back with hundreds of lines of code. I then pasted that into Visual Studio Code and ran it — almost perfect!

I also asked it the following:

Will merely increasing the number of parameters in large language models create conscious machines or is embodiment required?

And here's its answer:

Simply increasing the number of parameters in large language models does not guarantee the creation of a conscious machine. Consciousness is a complex and multifaceted phenomenon that involves not only the ability to process and generate language but also the ability to perceive and interact with the physical world.

While large language models have shown impressive capabilities in language processing and generation, they lack the embodied experience that is necessary for true consciousness. Embodiment refers to the idea that consciousness arises from the interactions between an agent and its environment. In other words, consciousness is not just about processing information but also about experiencing the world through a physical body and being able to act and react to the environment.

Therefore, to create a conscious machine, we need to go beyond just increasing the number of parameters in language models and focus on developing technologies that enable machines to sense, perceive, and interact with the physical world. This requires a multidisciplinary approach that involves not only computer science and artificial intelligence but also neuroscience, psychology, and philosophy.

(I couldn't agree more!)

OK: you're here! (I never found the book series X for Dummies insulting; that series was always my go-to source for learning Adobe or Microsoft software; guaranteed to be comprehensible.) So, here we go with clear explanations of AI and a little glossary for beginners.

AI is artificial intelligence. That's just software that does smart things. Examples are Google search (eg "what was Hitler's favorite music?"); Google Maps ("show me the best route to drive to the airport"); and the Amazon website ("show me battery-powered dog blankets"). Every year software that was formerly considered to be AI tends to be taken for granted or downgraded as just software. (Oh, Google or Amazon: that's just smart search.)

Narrow AI means AI focused just on specific areas: eg only on chess playing or other games or only on search or only on recognizing spoken words, eg to search by talking to your cellphone. (I never type on my phone.)

AGI means artificial general intelligence. This refers to AI that's broadly capable across many domains, doing everything that the average person can do. Speech recognition (eg Siri) was considered an early example. Yes, I grouped it with narrow AI above, but recognizing and understanding speech is a lot harder for a computer and far more general purpose than playing chess. But, the term "general" takes in a lot of territory. Some researchers prefer the term HLAI, arguing that what humans do is not really AGI, but rather just some specialized mammalian or primate tricks (like problem solving or tool using.)

HLAI means human level AI (kind of self explanatory). But, is HLAI (or AGI) referring to an eight year old child or to the average adult or to Einstein? It's not specified. It usually means intelligence at the level of the average adult. That may not seem impressive, but that takes in reading a book, chatting with friends, understanding shows on tv, shopping for groceries, driving to the store, taking care of your kids, preparing food, and doing your job (at least the routine parts.) It means understanding, planning, and doing everything that adults do every day. It's a huge collection of skills and AGIs are not there yet. Absent suicidal regulation by the US government, our corporations and the academy should make steady progress within the next decade. (I just sent a letter to Rep. Anna Eshoo (our congressional queen in shining armor) to please protect Stanford, Google, Meta, Apple, Adobe, Tesla, Intel (all in her district) from this Pause nonsense.)

When will AGI or HLAI arrive? A common answer on surveys of AI experts from ten to twenty years ago was mid to late century. That's changed big-time. After OpenAI and its LLMs, like ChatGPT and GPT4, many are now predicting the arrival within a decade or two.

I love the story that Prof. Stuart Russell tells about predictions, even by experts. Russell says that in 1933 Baron (and Nobel laureate) Ernest Rutherford said that extracting energy from the atom would never happen. Then, the very next day, physicist Leo Szilard conceived the neutron-induced nuclear chain reaction that showed how it could be done!

Superintelligence also seems self explanatory. This is Einstein on steroids. The image is future computers that might come up with theories and inventions more complicated than Einstein's theory of relativity every few minutes — but, not only in physics but also in biology, engineering, material science, government, international relations, neuroscience — everything — a Nobel Prize theory or invention every few minutes! This is the stuff of science fiction like the Terminator movies or The Matrix or Lucy or Transcendence. It won't happen in my lifetime but maybe in the life of my kids or grandkids.

Should we try to rein it in? Yes! I'd really like to see humanity survive and thrive (but not by destroying the biosphere.) As the doomsayers say, our fate could be the same as the chimps and gorillas that are just hanging on in nature preserves and zoos. My guess is that our rollout of AGI will be sufficiently gradual, incremental, and thoughtful that we'll have a good working rapport. But, humanity will adapt to it, and it will adapt to us (as does every other commercial product.) During civilization's adolescence, we need strong but supremely wise nurturing and guidance.

I'm ok with governments eventually regulating AGI through agencies like the United States FDA or FAA or NHTSA, but not yet. This is not the time, and a 6 month halt would be (worse than) useless and arbitrary. It would slow down the most potentially helpful and promising tech since the advent of the internet or the cellphone. The upside is far greater than the downside (even if the downside is non-negligible.) AGI won't destroy humanity in the next decade — nuclear weapons could easily do it tomorrow.

LLMs are large language models. These models (you'll also see the term foundation models) are the new kid on the block in AI research. They've been around for less than a decade, and they are the core technology of the AGI programs that are making all the headlines. These models are trained using as input basically (but not quite) all the written words (and images) on the internet. For example, the models generally use Common Crawl; that's a collection of three billion web pages! So, LARGE, yes! But the models are models (they don't include the original content); instead they include just the probabilities of which words generally follow others. For example, what word follows peanut butter and . . . or it's raining cats and . . . ? Those probabilities are stored in a giant (LARGE, again) network of artificial synapses. How big? Use this figure: one trillion numbers. (It's large but it's a lot smaller than the whole internet.) It's also a thousand times smaller than the number of synapses in the human brain (one quadrillion.)
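To make "probabilities of which words follow others" concrete, here's a toy Python sketch. It simply counts which word follows each two-word phrase in a tiny made-up corpus. That is an assumption for illustration only: a real LLM learns its probabilities inside a neural network rather than keeping a lookup table, and the corpus and the name `next_word_probs` are invented here.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model trains on billions of web pages.
corpus = (
    "peanut butter and jelly . "
    "peanut butter and jelly . "
    "peanut butter and honey . "
    "raining cats and dogs ."
).split()

# Count how often each word follows each two-word context.
follow_counts = defaultdict(Counter)
for first, second, nxt in zip(corpus, corpus[1:], corpus[2:]):
    follow_counts[(first, second)][nxt] += 1


def next_word_probs(first, second):
    """Return {word: probability} for the word that follows (first, second)."""
    counts = follow_counts[(first, second)]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


print(next_word_probs("butter", "and"))  # jelly is twice as likely as honey
print(next_word_probs("cats", "and"))    # only "dogs" was ever seen here
```

Note that the table stores only counts, not the original sentences, which is the sense in which a model is smaller than its training text.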

Large language models contain another piece that gets much less publicity but is hugely important, and that is word embeddings also called word-to-vector maps. These enable the storage of the meaning of words, eg the word bank can mean the place where you store your money OR the sides of a river. In the first instance, a word embedding would map bank to a group of words or phrases that include savings and loan, corporation, thrift, credit union, etc. In the second instance the word bank would be grouped with stream, river, flood, etc.
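Here's a minimal Python sketch of the bank example. The three-number vectors are invented by hand purely for illustration (real embeddings have hundreds or thousands of learned dimensions), and the names `embeddings`, `cosine`, and `nearest` are hypothetical. Treating the two senses of bank as separate entries is also a simplification; in practice the surrounding words in the sentence decide which sense is meant.

```python
import math

# Invented vectors: (money-ness, water-ness, building-ness).
embeddings = {
    "bank (money)": [0.9, 0.0, 0.6],
    "bank (river)": [0.0, 0.9, 0.1],
    "credit union": [0.8, 0.0, 0.7],
    "stream":       [0.0, 1.0, 0.0],
}


def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest(word):
    """Return the other entry whose vector points most nearly the same way."""
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine(embeddings[word], embeddings[w]))


print(nearest("bank (money)"))  # credit union
print(nearest("bank (river)"))  # stream
```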

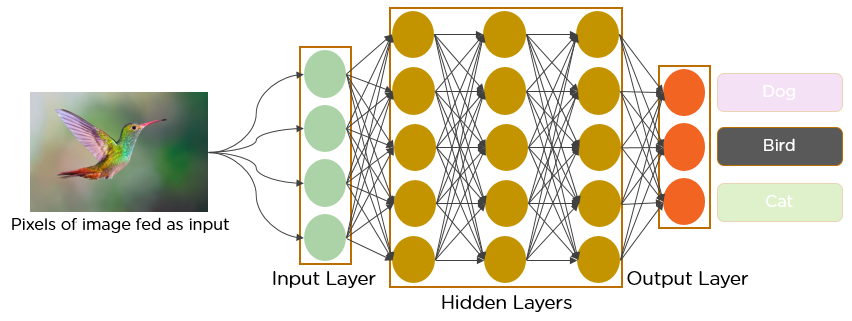

The input to the neural network is the photo; the output is the label.

Neural nets (or ANNs = artificial neural networks) are collections of artificial neurons (extremely simple models of neurons in the brain) that connect to one another via artificial synapses (again, massively simplified models of real synapses.) These have been the subject of study since my undergrad days at MIT in the sixties, when I taught an AI course to Boston high school students.
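How simple is an artificial neuron? Here's a sketch in a few lines of Python. The sigmoid squashing function is one common choice among several, and the weights below are made-up numbers, so take this as an illustration rather than a description of any particular network.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs, squashed into 0..1."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))  # sigmoid activation


# Each weight plays the role of a synapse: positive excites, negative inhibits.
print(round(neuron([1.0, 0.0], [2.0, -1.0], -1.0), 3))  # 0.731
print(round(neuron([0.0, 1.0], [2.0, -1.0], -1.0), 3))  # 0.119
```

A network is just many of these wired together, with the outputs of one layer serving as the inputs of the next.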

DNNs or multi-level neural nets. These are synonyms. DNN means deep neural net and it means exactly the same thing as multi-level neural net. Deep just means many levels (currently hundreds of levels stacked on top of one another). Deep does NOT mean profound or spiritual or philosophically deep.

DNNs learn by using back-propagation (back-prop). The idea behind back-prop is very simple. Just start with the correct answer (eg that's a cat and not a dog,) ie the answer you want, at the top, then adjust the synapses (or weights) that feed into it so that they give the right answer. Next, adjust the weights (synapses) that determine which artificial neurons fire in that layer, and on down the stack (upstream, back toward the sensor/receptor layer that "perceives the environment.")
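The top-down adjustment described above can be sketched on the smallest possible stack: one input, one hidden neuron, one output neuron. Everything here is an assumption chosen for illustration (the single training example, the starting weights, the sigmoid activation, the squared-error loss, and the name `train`); real networks do the same bookkeeping over billions of weights.

```python
import math


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def train(steps=200, learning_rate=1.0):
    """Train the chain x -> hidden -> output; return the error at each step."""
    x, target = 1.0, 1.0   # one made-up training example
    w1, w2 = 0.5, 0.5      # arbitrary starting weights (the "synapses")
    losses = []
    for _ in range(steps):
        # Forward pass: from the input up to the answer at the top.
        hidden = sigmoid(w1 * x)
        output = sigmoid(w2 * hidden)
        losses.append(0.5 * (output - target) ** 2)

        # Backward pass: start with the error at the top...
        delta_out = (output - target) * output * (1 - output)
        grad_w2 = delta_out * hidden
        # ...then pass the blame down to the layer below.
        delta_hidden = delta_out * w2 * hidden * (1 - hidden)
        grad_w1 = delta_hidden * x

        # Nudge each weight in the direction that shrinks the error.
        w2 -= learning_rate * grad_w2
        w1 -= learning_rate * grad_w1
    return losses


losses = train()
print(f"first error {losses[0]:.4f}, last error {losses[-1]:.4f}")
```

The error shrinks with each pass, which is all that "learning" means here.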

When this method was used with DNNs to do image recognition on the more than a million labeled images in ImageNet (next paragraph) in 2012, it worked so much better than all previous methods that the world took notice. Back-prop was popularized as a learning method for multi-level neural nets in 1986 by David Rumelhart (then at UC San Diego, later at Stanford), Geoff Hinton, and Ronald Williams. Strictly speaking, back-prop computes how much each weight contributed to the error, and gradient descent uses that information to adjust the weights. Gradient descent nicely describes how it works: climbing straight down a mountain as a method of finding a valley. (That valley is likely, but not necessarily, the lowest one around, ie the solution with the least error.)
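The mountain picture is easy to try in Python. The landscape below is a made-up curve with two valleys (the functions `height`, `slope`, and `descend` are invented for this sketch), and it shows why the valley you reach need not be the deepest one: it depends on where you start walking.

```python
def height(x):
    """A made-up landscape with a deep valley on the left, a shallow one on the right."""
    return x**4 - 3 * x**2 + x


def slope(x):
    """Derivative of height: which way is downhill, and how steep."""
    return 4 * x**3 - 6 * x + 1


def descend(x, learning_rate=0.01, steps=1000):
    """Repeatedly take a small step downhill from the starting point x."""
    for _ in range(steps):
        x -= learning_rate * slope(x)
    return x


left = descend(-2.0)
right = descend(2.0)
print(f"start at -2: valley at x={left:.3f}, height {height(left):.3f}")
print(f"start at +2: valley at x={right:.3f}, height {height(right):.3f}")
```

Starting on the right, the walker settles into the shallower valley and never learns that a deeper one exists on the other side of the hill.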

CNNs are convolutional neural nets. These were invented in the 1990s by Yann LeCun, a Parisian, who is the Chief AI Scientist at Meta (formerly Facebook). He is also a professor at NYU. (I mention Yann's French background, because if you want a job in the AI C-suite at Meta, it seems to help to be from France as are many of his brilliant colleagues. Voila! I meet them at Stanford.) CNNs cracked the problem of computer vision in 2012 with the program AlexNet, a DNN that was applied to ImageNet (a massive repository of carefully labeled images from the internet.) The ImageNet Challenge, which for five years was the benchmark for computer vision, was organized by my upstairs neighbor Olga Russakovsky as part of her Stanford PhD work for her supervisor Professor Fei-Fei Li (our queen of image recognition.)
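What does "convolutional" mean? A small grid of weights (a kernel) slides across the image, and at each position the pixels under it are multiplied by the weights and summed. Here's a toy Python sketch with a hand-picked kernel that lights up at vertical edges. The 4x4 image, the kernel, and the name `convolve2d` are all invented for illustration; in a real CNN the kernel weights are learned by back-prop rather than chosen by hand. (As in most deep learning code, the kernel is not flipped, so strictly speaking this is cross-correlation.)

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image; return the grid of weighted sums."""
    k = len(kernel)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    output = []
    for r in range(rows):
        row = []
        for c in range(cols):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Dark on the left (0), bright on the right (1): one vertical edge.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Responds when the right-hand pixels are brighter than the left-hand ones.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

for row in convolve2d(image, edge_kernel):
    print(row)  # [0, 2, 0] on every row: the edge sits in the middle column
```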

GPUs are graphics processing units. Imagine drawing a large mural on a wall. It might be a lot faster to draw it with ten artists working simultaneously rather than just one. GPUs were originally invented for rapidly displaying images in computer games. And that's still a crucial use. The one company you need to know that makes GPUs is NVIDIA. NVIDIA's GPUs coincidentally hit their stride just in time to be used by Yann LeCun and subsequently by Alex Krizhevsky, Ilya Sutskever, and Geoff Hinton to crack CNNs using AlexNet in 2012. That made history! Here is NVIDIA founder Jensen Huang interviewing OpenAI's chief scientist Ilya Sutskever. Like GPUs, biologic brains are massively parallel. But, most of your 86 billion neurons are just sitting there, not firing (unless you're stoned on psychedelics.)

Transformers were another crucial breakthrough that enabled AGI and LLMs. These appeared in spectacular fashion in the paper Attention is All You Need in 2017. Putting this out in the open was a fantastically generous act from Google's Google Brain division. This invention cracked open large language models (and underlies all of them). The basic idea is that not every word in a sentence is equally important. And predicting the next word in a sentence can depend far more on earlier words than on just the previous word. Also, word order varies tremendously from one language to the next (the big red dog versus le gros chien rouge.) Like CNNs, transformers were a quantum leap (word-to-vec maps this phrase to tectonic shift, breakthrough, step change, etc.) in the history of AI and AGI.
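The "not every word is equally important" idea is what the attention step computes. Below is a minimal Python sketch of scaled dot-product attention for a single query. The two-number word vectors are invented by hand (real models learn them, use far more dimensions, and run many attention "heads" in parallel), and the names `softmax` and `attention` are just labels for this sketch.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Weigh each word's value by how well its key matches the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    blended = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, blended


words = ["the", "big", "red", "dog"]
# Invented key vectors; the last one is built to match the query best.
keys = [[0.1, 0.9], [0.2, 0.8], [0.3, 0.7], [1.0, 0.1]]
values = keys                 # reuse the keys as values to keep the toy small
query = [2.0, 0.0]            # "what should the next word pay attention to?"

weights, blended = attention(query, keys, values)
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

Here "dog" gets the largest share of the attention even though it is not the only word in play, and the blended output is a weighted mix of all four words rather than a copy of just one.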

I welcome substantive comments on all my articles. I will print your comments only if you grant your permission. You can post here anonymously, but to do so you should have a verifiable identity, eg a website, LinkedIn ID, or Facebook page, etc. Mail comments to bob AT bobblum DOT com (with the usual syntax.)

9 April 2023: My friend and interlocutor, Rich S., writes "I largely agree with your essay. But, here are some points where I differ."

Advocating that there be NO regulation is a bad idea.

Gary Marcus and many others have commented on why that's a terrible idea. I can't find the best Marcus quote I saw on this, but it's clear that doing nothing at all is a stupid idea. Almost as stupid as not regulating imaginary cryptocurrencies as the world's only form of currency.

Almost as stupid as not regulating all the other things we regulate, not to mention CRISPR and mRNA and nuclear waste and speed limits near schools.

Yes, government regulation is too often largely "too little, too late." But to do nothing is irresponsibly corrupt.

The coding example you (Bob) showed us is so trivial (video pong) that it does not represent the real essence and challenges of coding.

Most applications have tens of thousands of lines of code. Operating systems have on the order of tens of millions of lines of code. No AI that exists today can be trained on any of that. There's only one Google (~2 billion lines of code) and nothing to compare it to. Thus, no training about that is possible. Bob's example is something so simple that thousands (possibly many more than that) of full source examples abound on the internet (not least of which, GitHub). Finding a bug in any decent-sized application is orders of magnitude more difficult than Bob's video pong example.

Peter Diamandis's ideas about economics are unhinged.

His book, Abundance: The Future Is Better Than You Think is fantastical thinking, unrelated to the reality of what humans do. "Abundance for all is within our grasp," he writes. When everything's automated, everyone will have whatever they need. As if the entirety of human history doesn't disprove that. Human greed will outbalance the empathy and generosity that humans are capable of.

Gary Marcus, again: "We have a perfect storm of corporate irresponsibility, widespread adoption, lack of regulation and a huge number of unknowns." Corporations have financial incentives that do not match the needs of the entire world. And, only a tiny number of profit-driven corporations have the ability to drive AI research. They're just gonna donate all their profit, and "Do No Evil." Right. Wanna buy this bridge I got?

10 April 2023: I (RLB) respond: Hi, Rich, and thank you for your careful reading and comments.

As you may recall, I've been a devotee of Gary Marcus's writing for a couple of decades. Gary's suggestion for some US regulatory body like the FDA, the NHTSB, or the FAA sounds sensible, but it’ll take far longer than 6 months to set up and to get buy-in from all stakeholders.

There are two parts to my argument. One is the infeasibility of enforcing or monitoring a halt any time soon. The second is basically that "the cat is out of the bag." Even if we could stop Microsoft and Google for 6 months, there are dozens of other US, European, and Asian companies, plus malign governments, who would be dancing for joy if there were a US-mandated halt.

Note well: Raw GPTs are beasts; they are NOT warm and fuzzy. Listen to the YouTube I linked above in which Nathan Labenz talks about his time on the OpenAI Red Team. Before being tamed by humans, GPT would nonchalantly tell you how to kill the most humans as cheaply as possible. It also blithely helps you with targeted assassinations and with cooking up deadly viruses.

In regard to coding: TRY IT!!! My little video pong example was just meant to be something I myself might inspect and debug. GPT is a far better coder than I was (even at the height of my skill when I was 35). GPT is NOT more reliable, just more prolific. It copiously codes in every computing language. It draws on 422 billion tokens in the GitHub repository. (Soon every professional coder will be using it for first drafts and ideas.) But its code is imperfect.

Like you, Peter Diamandis is one of those good-hearted souls that is a perennial optimist about the human race. His abundance position is over-the-top, but, of course, hope springs eternal. (I myself am a cynic who thinks we’re headed for trouble. With AGI, Bill Gates and I are grasping for one last life preserver for humanity. Listen to his marvelous interview (by MSFT CTO Kevin Scott) extolling the promise of AGI.)

And again on Gary Marcus's position on corporate irresponsibility: yeah, I sort of agree. But, OMG, listen to Bill Gates above. Bill is such a nice guy, concerned about the planet, and would gladly sacrifice billions of MSFT revenue to make the world a better place. In this one hour interview there’s not a peep from him about GPT safety concerns. (Of course, there should be.) The reason is that he’s overwhelmingly positive on the huge upside of AGI.

9 April 2023: I (RLB) just saw this excellent 5 minute YouTube on The Intelligence Explosion, featuring Nick Bostrom, Oxford Prof. of Philosophy. Nick has written about this topic for decades including his widely read book: Superintelligence.

The topic of superintelligence can be traced to one prescient 1965 paper by another Oxford researcher, I. J. Good: Speculations Concerning the First Ultraintelligent Machine. It still reads like it was written yesterday. I. J. Good was one of the cryptographers who worked with Alan Turing at Bletchley Park deciphering codes sent from the Third Reich's Enigma machines.

On 10 April 2023 reader Wanonymous writes — "these debates are so intriguing!"

I've been listening to a lot of Ezra Klein podcast episodes; there is also a recent YouTube by Andrew Ng and Yann LeCun on Friday supporting your pov.

I was told the scientific community is annoyed because ChatGPT isn't forthcoming about their training data sources, which impedes research.

10 April 2023: I (RLB) respond: thanks so much for your note; I'm a huge fan of both Andrew and Yann. (Their YouTube, linked above, is a must-listen! They are two of the world's top AI researchers and think that halting research would be a huge error.)

In re: the scientific community's annoyance with OpenAI: yeah, I've heard a fair bit of grumbling.

But, there are at least two legitimate reasons for OpenAI to keep the details secret. (Even in their recent 98 page publication, the architectural details were not disclosed.)

The first reason is money. For example, contrast their secrecy to Google's hugely helpful 2017 public disclosure of their work on transformers (a massive breakthrough). This disclosure has probably cost Google billions of dollars lost to competitors.

The second reason for their keeping quiet about their secret sauce relates to the sentiment behind the petition: enhancing GPT's safety. There's no doubt that this is an annoyance to other curious scientists, but it may slow down progress by their competitors, including the bad guys (eg in Pyongyang.) RAW GPT without RLHF is really an amoral beast, and OpenAI wants to keep it chained up. (RLHF = reinforcement learning from human feedback)

10 April 2023: Here's another of my favorite YouTubes on AGI. Here in 2022 Prof. Pieter Abbeel interviewed OpenAI co-founder and chief scientist, Ilya Sutskever. Don't mistake Prof. Abbeel for a garden-variety blogger. He is one of the world's leading roboticists focused on endowing robots with the ability to learn (unlike the agile but cerebrally NON-dynamic robots at Boston Dynamics.) Here, Ilya beautifully explains the history and rollout of his many world class AI innovations starting in 2012 with AlexNet, so named for its first author and main coder, Alex Krizhevsky (in PI Geoff Hinton's U of Toronto lab.)

11 April 2023: Interlocutor Rich S. draws this Axios article to my attention today: Commerce Department Looks to Craft AI Safety Rules. I see there will be a 60 day period for public comments. (They will be hearing from me!)

Meanwhile (Trump voters, have I been too hard on you?) I see that Trump's White House in January 2020 pushed for a light touch in the regulation of AI and warned against over-regulation.

And finally, Bloomberg News reports (thanks, Rich) that China Mandates Security Reviews for AI Services Like ChatGPT. Chinese GPTs will report on the glorious deeds of the all-wise, all-seeing dear leader. Or, spring breakers come have a party at our camps in Xinjiang with our Uyghur friends. Beijing is already strangling its high-tech industry as it did with Alibaba's founder Jack Ma. Also, overnight a few months ago it completely banned and dismantled tutoring for Chinese students. The good news doesn't stop.

11 April 2023: I (RLB) asked ChatGPT to summarize this essay. Here's what it wrote.

The article "Pause AGI Research? Forget It!" by Bob Blum discusses the idea of pausing research on Artificial General Intelligence (AGI) due to concerns about its potential risks. Blum argues that such a pause is not necessary and would be counterproductive.

The author acknowledges the concerns about AGI and its potential risks, such as job displacement and existential threats to humanity. However, he contends that pausing research on AGI would not solve these problems and would instead hinder progress towards potential benefits of AGI.

Blum argues that AGI research can be pursued in a responsible and ethical manner. He suggests that researchers should prioritize safety and transparency, as well as engage with the public and policymakers to address concerns and potential risks.

Furthermore, the author highlights the potential benefits of AGI, such as solving complex problems and improving human lives. He argues that these potential benefits outweigh the risks and that progress towards AGI should continue.

Overall, the article argues against the idea of pausing research on AGI and emphasizes the importance of pursuing AGI in a responsible and ethical manner. The author acknowledges the concerns and risks associated with AGI but suggests that progress towards its development should continue with a focus on safety, transparency, and public engagement.

My comment: ChatGPT seems like a nicer, more diplomatic version of me — like a skilled press secretary.

14 April 2023: Today's big story reported on CNBC is this: Elon Musk creates A.I. startup called X.AI to take on OpenAI's ChatGPT. The story is reported by Richard Waters, the West Coast editor of the Financial Times. This would seem to expose Musk's true motive in signing the Pause petition. As many guessed, he just wants to slow down the competition.

Elon was a co-founder of OpenAI in 2015 with other financial and tech heavyweights. But Musk packed up and left in 2018. Rumors include his wanting to take it over (and being rebuffed). Or, that he left because of a conflict of interest with Tesla's AI shop in Palo Alto. (I note that he poached AI wunderkind Andrej Karpathy from OpenAI; Andrej has just returned to OpenAI, a great addition alongside the talented co-founder and chief scientist Ilya Sutskever.)

13 April 2023: Reader LT writes: Bob, thanks for the nudge on this (signing up with OpenAI.) . . . I had been thinking about purchasing a walkingpad/desk treadmill... but trying to review options is tedious!

My first use of ChatGPT was to search for the best desk treadmill options less than $700, that were quiet, had a remote control, and could be easily stored. It gave me two good options!

14 April 2023: I (RLB) respond. Thanks for your note, LT. I have an impromptu solution that I use: namely, I place a laptop and monitor on top of my 30 year old stereo cabinet which is about 4 feet high (to make a convenient standing desk — albeit stationary.)

12 April 2023 — FB (a multi-decade AI research pioneer) writes . . .

Hi Bob, just a quick note ... I haven't had a chance to read the full essay yet. Without a doubt, GPT4 is extremely impressive. It and its successors will generate a lot of value, and make various players a lot of money.

Unless I'm missing something there is a mistake in the second sentence of the GPT4 answer you quoted. "Consciousness ... involves not only the ability to process and generate language ...". This seems obviously false since all animals other than homo sapiens are conscious, but they do not process or generate language.

While GPT4 is super impressive, it is also *often* just flat out wrong, and so still requires a human proofreader if the application has any importance at all.

You have asked the question in a biased way. I'll bet you $100 that if you prompt it with (something to the effect of), "Explain why embodiment is NOT required for consciousness" you'd get an equally impressive answer in a different direction. Or you could ask it to generate the strongest argument it can both for and against, and then ask it to evaluate those arguments. Again, the answer will sound impressive, and will be better than most humans could generate on the topic, but you will still have no idea (just based on GPT4 answers) which argument is actually closer to the truth.

And yes, of course it will get better at giving true answers. Hope you are well.

12 April 2023: AI researcher FB continues in a follow-up email . . . My points boil down to:

1. I fear that developers will rely on GPT4 for important applications without competent humans in the loop to check the work.

2. I fear that many people deploying GPT4 based applications don't understand that any given GPT4 sentence can be totally wrong.

I acknowledge that these two points are not all that deep or interesting relative to everything that's at stake here, and relative to the actual deep interesting questions at play.

12 April 2023: I (RLB) respond.

What great responses! It is undeniable that GPT4 can be (and frequently is) totally wrong.

My main stance is just that this call for a pause is unworkable, can’t be monitored, and that (I think) the good will vastly outweigh the bad. (And AGI may be the only comprehensive way to keep GPTs and bad humans from spewing misinfo on Meta, Twitter, etc. Meta's independent oversight board is great; even the Facebook haters love the oversight board, whose job it is to pass judgment on which FB posters are allowed and who is blocked. But, these overworked humans can only review perhaps a couple dozen cases per year, deciding eg to block our last US president (the orange, recently indicted, clown).)

13 April 2023: Following FB's challenge, I (RLB here again) did change my question posed to OpenAI: Please explain to me why physical embodiment is NOT required for consciousness.

And, (just as FB predicted), it spouts a coherent essay supporting THAT statement.

Consciousness is generally understood to be the subjective experience of being aware of one's surroundings, thoughts, and feelings. While consciousness is often associated with physical entities, such as the brain or nervous system, there is a growing body of research and philosophical thought that suggests that physical embodiment is not strictly necessary for consciousness to exist.

One argument for this position is based on the observation that different physical systems can exhibit similar kinds of behavior and information processing. For example, a computer program can simulate the behavior of a living organism or even an entire brain. While these simulations are not themselves conscious, they suggest that the patterns of information processing that underlie consciousness could in principle be instantiated in a non-biological system.

Another argument is based on the fact that we cannot directly observe the consciousness of others, but must infer it from their behavior and communication. In principle, it is possible that a non-physical system could produce behavior and communication that is indistinguishable from that of a conscious being, even if it lacks a physical body.

Some philosophers have also argued that consciousness is a fundamental aspect of reality that cannot be reduced to or explained by physical processes. This view is sometimes called panpsychism, and suggests that even elementary particles may have some degree of consciousness or proto-consciousness.

Overall, while the relationship between consciousness and physical embodiment is still a topic of debate and research, there are compelling arguments and evidence to suggest that physical embodiment is not strictly necessary for consciousness to exist.

(Bob speaking): I won't dive into this debate. (I know exactly how consciousness works, but that's a subject for another essay. Want a "head start?" Read this.) Plainly, ChatGPT here gets an A on the Turing test, but its answer doesn't persuade me. (To be precise, you need a body to train up the knowledge of the physical world, but once you’ve got it, then you can still be conscious despite being asleep and dreaming or despite quadriplegia or locked-in syndrome.) AGIs will NEED that non-linguistic perceptual knowledge in their march toward human parity. The near-in path to perceptual acquisition is via robotics.

15 April 2023: In a follow-up email FB (multi-decade AI expert) continues:

I scanned your article about the proposed pause. I agree that it's un-implementable regardless of whether or not it would be desirable.

Thank you for trying my experiment. It demonstrates my point well. GPT* is willing to argue eloquently nearly any side of anything, so it's not clear *currently* how to use it to figure out something that you don't already know the answer to.

(And onward ...) Here I will use DL* to refer to the entire family of existing so-called AI technologies.

I concede that on artistic non factual matters, DL* are already delightfully creative.

Also, when DL* generate well formed human language verbiage on any topic of interest, I concede that that is monetarily valuable, intellectually interesting, useful, fun and so forth. This is true even though these well formed sentences may contain any number of errors.

However, the whole point of AI is to figure out factual matters that nobody currently knows the answer to. (As a byproduct of this an AI would also be able to explain things to me that I don't personally already know, or to argue me out of things that I think I know which are wrong.)

If all an AI can do (relative to factual matters) is craft sentences to convey what humanity *thinks* it already knows, then really it's just a glorified search engine with an NLP interface on both the input and output side. (This statement isn't taking into account the impressive DL* accomplishments in protein folding and other application areas.)

Of course, the DL* will only get better, and the number of errors will come down.

17 April 2023: My son, Sean, is a brilliant (proud dad here) software developer for a local start-up. Yesterday, I chatted with him about the issues raised in my essay. Here are his answers.

A six month pause won't do much. But, I ask, "how about a two year pause?" He answers that you just won't be able to slow everyone down. It's easier to make progress covertly in software development than it is, say, in molecular biology or with nuclear weapons. And, certainly, not everyone will abide by a pause even just within the United States. Values alignment is an incredibly difficult problem that won't be solved during a pause of a year or two.

I ask, "what are the problems with GPT/ AGI that are most concerning?"

At the top of Sean's list (above the certainty of the dissemination of misinformation) is the probability of disabling cyber security attacks that could take down our stock market or banking system. I ask, "why hasn't this happened already due to Russian or North Korean government sponsored hackers?"

He answers. The strength of AGI attacks is that they could be massively parallel (eg like Agent Smith in The Matrix), insanely unrelenting, and armed with super-human skills attacking every vulnerability, known and unknown. They will be coding geniuses. (My remedy is, of course, "white hat" AGIs protecting those systems. A gap in time between an early attack by the black hats versus a delayed development of the white hats is concerning.) Sean expects there could be a violent societal blowback to a bad attack, followed by stringent regulatory pushback.

Sean mentions the e/acc movement (effective accelerationists): these include those who want to bring on the AI Overlords as fast as possible. To exonerate myself, my desired future would include happy humans in the mix, but probably subtly reined in (as with a well-supervised nursery school). Sean labels what I want as a Philosopher King AGI. (A role model might be the late, great Lee Kuan Yew, founder of modern Singapore, a stern, but well-intentioned, benevolent dictator.)

15 April 2023: web developer Richard H writes:

Great article, insight and links! ... I had managed to catch the video you recommended with Bill Gates, which I thought was good. I wasn't aware of the 'raw gpt' aspect of the technology, though it occasionally surfaced when I was experimenting; for example I had it write a complaint letter to Home Depot and it quite convincingly, and without prompting, fabricated a family event that was disrupted by a late delivery! Out of curiosity, we also asked it to write the letter in the style of 'the man with no name' and it came back with a perfect western script for Clint Eastwood complaining about a missing table, including the paragraph "So here's the deal: they need to deliver the table, or they need to give me my money back. I don't care which, as long as they make it right. If they don't, I'll be paying them a visit, and they won't like what happens next." ... It'll be an interesting ride over the next 10 years!

15 April 2023: Stanford Professor (of psych and neurosci) Brian Knutson writes: Dear Bob: I enjoyed your article, and will continue enjoying the helpful links! It seems that ChatGPT has made "experts" out of all of us...

Here's a bit I wrote way back in January 2023 about the need to define and reduce Artificial Stupidity... hope it will entertain more than annoy (but it contains a seed of seriousness).

Reducing Artificial Stupidity by Prof. Brian Knutson, 2023

Cheers, Brian

16 April 2023: I (RLB) respond: Hi Brian, How great to hear from you. And, I love your blogspot!

Moving on to substance: I love your definition of intelligence (alignment with reality; sadly missing in the orange clown.) I remember attending a Stanford seminar around 1980 with both Amos Tversky and Danny Kahneman; great stuff! I’m really hoping the AGIs get smarter, and I’m guessing they will. My guess is that Big Tech will put $100 billion into it in the next year or two. I hope some of this dough flows to Jordan Hall. It should! In an email I sent to (our fearless leader) Jay McClelland yesterday, I told Jay that one way to view MBCT (the Stanford Center for Mind, Brain, Computation, and Technology) is discovering/showing the world what humans have got that machines don’t (yet); eg: spiking neurons; spike timing dependent plasticity; subtle inter-regional communication/resonances (among brain nuclei and to and fro from sense organs); real-time interaction with the environment, etc. (And doing it all on 20 watts!) Bob

22 April 2023: I just watched another outstanding YouTube. Here on DW (Deutsche Welle) is an interview with three AI experts who signed the petition for a halt to the rollout of LLMs. The best known of the three is Jaan Tallinn, who co-founded Skype. Jaan is also on the Board of Future of Life (the drafters of the petition.) Jaan's main emphasis is on the accelerating pace leading to the "Singularity." That's the point at which superintelligent machines are autonomously designing their successors. We're decades away from that. My only regret is that I'm sure I won't personally live to see it (atomic scale photonics, let alone atomic scale CMOS: bring it on!)

22 April 2023: In animals and in young children language has nothing whatsoever to do with intelligence. Take a look at this: Backyard Squirrel Maze 1.0 - Ninja Warrior Course. This is how, as kids, we picked up our commonsense and intuitive physics. It's missing in the LLMs. But, AGIs may eventually learn it directly with sensor arrays and actuators interacting in real-time with the world (or get the synaptic weight matrix transferred in secondhand from a robot that learned it.)

28 April 2023: Here at Stanford our AI star Prof. Fei-Fei Li hosted Demis Hassabis, founder and CEO of DeepMind on Using AI to Accelerate Scientific Discovery. Demis explains how he selects the scientific problems to work on: importance and amenability (to being cracked by smart search.) DM's AlphaFold is an earth-shaking example. (The committee awarding the 2023 Breakthrough Prize agreed.)

I wondered for a few nanoseconds why London-based Demis Hassabis was in town here in Silicon Valley. Here's the answer: Google's big AI push will combine (Google) Brain and DeepMind into one team. Nothing like a little competition from Microsoft/OpenAI to get the Tensor Processing Units humming.

Here are the details from Ars Technica on 21 April 2023: Google's AI panic forces merger of rival divisions, DeepMind and Brain. Prior to this, the London-based, science-focused, headline-generating DeepMind was separate, although owned by Alphabet/Google. Now, the dynamic duo has produced the trillion parameter Gemini LLM to compete with Microsoft/OpenAI's GPT4.

30 April 2023: Expert AI developer, MB writes:

Hi Bob, thank you for sharing your essay. The essay and the comments you received are particularly interesting to me as they relate to my line of work. I would like to contribute my 2 cents as well.

I agree with your argument that trying to pause research and training on AI is meaningless at this point, as it would be like trying to put toothpaste back in the tube. However, my concern is that AI is a novel concept, and the regulatory authorities are unsure how to apply existing regulations to it. This is particularly concerning given the potential applications of AI in medicine, where it has already shown great promise in areas such as physician chatbots: J. Ayers, et al. "Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum", which claims "The chatbot responses were preferred over physician responses and rated significantly higher for both quality and empathy." And in medical imaging analysis: in M. Mazurowski, et al. "Segment Anything Model for Medical Image Analysis: an Experimental Study": "We conclude that SAM shows impressive performance for some datasets given the zero-shot learning setup but poor to moderate performance for multiple other datasets."

Despite these potential benefits, unauthorized use of AI could lead to disastrous results and potentially regulatory backlash, as demonstrated by the Koko incident where GPT-3 AI was used without informed consent from users: https://www.nepsy.com/articles/leading-stories/koko-used-gpt-3-ai-without-informed-consent-from-users

There are comparable worries in the realm of trading, where it's conceivable for models representing various trading entities to create their own communication language, collaborate, and exploit the market to gain the maximum profit. This action is currently outlawed, but the question is how we can guarantee that we stop this spontaneous emergence of communication, which may happen either unbeknownst to humans or with humans having plausible deniability. The topic of emergent communication is discussed, for example, in "Learning to Draw: Emergent Communication through Sketching" by D. Mihai et al.

I will not delve into discussing the use of Deep Fakes and similar technologies as a means to influence politics and elections, since people are already aware of their impact. However, I would like to draw attention to the fact that with the advent of emerging agent technology, it has become possible to significantly amplify false information while making it difficult to detect and counteract. This is due to the increasing difficulty in distinguishing between intelligent bots and humans. As one state park employee remarked about the challenges of designing garbage bins in parks inhabited by bears, there is a significant overlap in intelligence between the smartest of bears and the stupidest of tourists. Similarly, in the case of bots and humans, this overlap can also be observed. An example of this can be seen in the recent video "GPT-4chan: This is the worst AI ever" by Yannic Kilcher, where a bot successfully spammed 4-chan for several days without other users realizing that they were interacting with a bot.

3 May 2023: I (RLB) reply to AI developer MB's post above.

Hi MB, thanks so much for your note and the detailed citations. I got a kick out of the fact that the chatbots' responses were preferred over the physician responses. (It frequently seems that ChatGPT is a kinder, more diplomatic version of me! It would probably get higher satisfaction scores from my patients than I used to get during my years of clinical practice.)

I was unaware of the Mazurowski paper, Segment Anything, applied to medical images. But the results conform to my expectations: "performance appears to be high for tasks including well-circumscribed objects ..." but poorer for ... fuzzy lesions (my words.)

I also hadn't heard about the Koko mental health chatbot debacle. (OMG! ... giving online counseling to people in dire straits!?!) I recognize many famous people on their oversight board (Tom Insel, former director of NIMH, to name one!) Well, so much for human supervision! Perhaps oversight by a board of AGIs would've been more effective!

In re: stock trading. Great observation: AGIs bidding up stocks in a pump and dump scheme (all untouched by human hands (or with plausible deniability!)) Yeah, I stick to US treasuries; but, please, Congress, raise the US debt ceiling, stat! (US Congress: another organization that needs to be replaced by AGIs!)

In re: Deep Fakes. This, of course, is the widely discussed area of immediate concern. I'm hoping that some combo of watermarks and screening by AGIs will reduce this very real threat.

Bears are amazingly intelligent. I've tried to match wits with them while backpacking (and have had food stolen, despite elaborate suspension of food bags from distal tree branches.)

Thank you for bringing to my attention the Yannic Kilcher saga. Hopefully, this will get the AGI developers in high gear to screen against this huge vulnerability. (I regularly see the Chinese troll farms, the 50 Cent Army, working overtime in the comment section of YouTubes that criticize Xi Jinping and his buddies in the PRC.)

On 2 May 2023 architect Walter Cox, who is an old friend, wrote:

Re: your stance on AI, Bob, I believe you are starting down the wrong path, and I feel some responsibility to sound a warning.

Your error is in confusing cleverness (with its narrow focus on memory, reason and will) with the broader palette of qualities of mind that are more properly called intelligence (add in conscience, intuition, consciousness, desire, emotion, curiosity, and creativity). Only when our focus expands to encompass this broader palette can we humans advance to fulfill our purpose: the refinement of our essence as beings created in the image of God.

You would do well not to aid and abet those who lack reverence for the sacred purpose of human life, as they will promote evil on an unprecedented scale.

I am glad Dr. Geoffrey Hinton has come to understand this:

Walter

And, on 2 May 2023, I responded: Hi Walter . . .

Your comments are right on (except for the fact that they’re addressed to the wrong guy!) I couldn’t agree with you more. See eg my earlier article here from 2016: AI and the Future of Humanity. (Note my emphasis on exactly the missing qualities you list.) In my concluding paragraph I state "It should be obvious that AI must also surpass us in the realms of wisdom, beauty (aesthetic appreciation), goodness, and compassion, before we voluntarily turn over the reins of civilization to them." Over billions of years evolution has carefully crafted emotions in us; they are totally absent in our machines.

I’m also in the midst of rebutting comments by Prof. Stuart Russell, whom I’m in correspondence with (so you’re in good company, although he raises different objections to GPTs.)

Best wishes, Bob

5 May 2023: Here's a do-not-miss interview by MIT Tech Review of Geoffrey Hinton. By now, everyone must know that Geoff Hinton is one of the great multi-decade pioneers of machine learning, most recently at Google. At age 75 he realized he's just too old to continue working — Biden and Trump; please take note! — and, furthermore, he wanted to be free to express his opinions about the dangers of AI without damaging Google.

Hinton repeatedly emphasizes the great good that will come from AGI through its many applications to healthcare and productivity. But, when pressed by interviewer Will Heaven of MIT TR on the dangers, he seems to be less sure. He mentions the usual concerns about job loss and increasing inequality, and finally mentions that machine intelligence might be a natural, inevitable evolution from biological intelligence. (Yes, exactly! See my 2016 essay: The Future of Humanity. Summary: we humans do have some special qualities that AGI won't soon replace.)

8 May 2023: My son, Sean, showed me this paper: Google "We Have No Moat, And Neither Does OpenAI." The paper, allegedly leaked from a Google researcher a few days ago, has been the talk of the town today. For example, see the YouTube video Google LEAKED Document Revealed Shocking AI Plot Twist No One Saw Coming. (Kudos for that great click-bait title!) And here's some less "shocking teenage mutant rampaging robots" coverage of the same story: Google AI Documents Leak about 'Google and OpenAI'. The bottom line is that the open source community of developers may outrun the big corporations in innovating new large language models. Yes, the toothpaste is out of the tube. A pause meant to slow down AGI research just won't work.

9 May 2023: And, I'm not the only one who thinks that a pause won't work. There's also Geoffrey Hinton interviewed today on PBS by Hari Sreenivasan. Hinton, the "Godfather of AI," emphasizes that he did NOT sign the pause petition — he thinks a pause is totally infeasible. (Yes!) Nonetheless, the threats are real, and industry should devote more money to reducing potential harms.

As we know, the near-in threat is from flooding the internet with deep fakes. A hypothetical example might be one of Biden advocating revoking the 2nd Amendment. Hinton thinks fake news helped sway the Brexit vote and the 2016 US Presidential election. He makes a comparison to the extensive efforts and laws that guard against counterfeit currency. He advocates similar regs for deep fakes. (Knowingly pass a fake $100 bill, and you could be locked up for a long time.)

As in previous interviews, he says that evolution could simply be passing the bright torch of intelligence from us to the machines. (As he says, they can learn trillions of times as fast.) But, inevitable or not, the prospect, he believes, needs to be guarded against by international treaties — as with nuclear weapons. (Here, I think he's a starry-eyed idealist. China is massively expanding its nuclear arsenal. Russia has backed away from long-standing nuclear agreements. Iran is on the verge of becoming a nuclear state.) An international ban on AGI or superintel? My crystal ball says "forget it!"

9 May 2023: Here, ARK Invest's Frank Downing (Director of Research for Next-Gen Internet) interviews the totally formidable James Wang on Scaling Laws and the Current State of LLMs. James is the Marketing Director at Cerebras — a wafer-scale AI hardware company. (Everyone else dices their pizza-sized wafers into postage-stamp-sized chips. Cerebras alone plugs in the entire wafer to train multi-billion parameter LLMs.) This interview/chat is for the AI cognoscenti, but, if you can follow it, it's totally entertaining. No pause b.s. here; just pedal to the metal!

13 May 2023: Prodded by a friend about the urgency and necessity of an international ban on AGI, I appealed the matter to a higher intelligence.

I asked he/she/it this question:

Will it be possible to achieve international agreement to ban the development of advanced artificial general intelligence? . . . (and it replied)

While it is difficult to predict the future, achieving an international agreement to ban the development of advanced artificial general intelligence (AGI) would be challenging for several reasons:

Differing national interests: Countries may have different priorities and interests when it comes to AGI development. Some may see it as a potential source of economic growth, while others may view it as a strategic advantage in areas such as defense or technology.

Competitive pressures: The potential benefits of AGI could create a competitive race among nations, making it difficult to reach a consensus on banning its development.

Enforcement challenges: Even if an agreement were reached, enforcing a ban on AGI development would be difficult. Monitoring and verifying compliance would be challenging, given the decentralized nature of AI research and the potential for covert development.

Dual-use technology: AGI could have both civilian and military applications, making it difficult to draw a clear line between permissible and impermissible research.

Rapid technological progress: The pace of AI development is accelerating, and it may be difficult for international agreements to keep up with the rapid advancements in the field.

Despite these challenges, it is still possible that international cooperation could lead to agreements on specific aspects of AGI development, such as safety standards, ethical guidelines, or limitations on certain applications. However, a complete ban on AGI development would likely be more difficult to achieve.

(Note: GPT4 is now accessible for free at forefront.ai. You are now limited to 5 inquiries every 3 hours.)

(Bob here again . . . For those — this excludes me — pressing for an international ban, you may draw encouragement from the international ban on chlorofluorocarbons (CFCs), which destroy the protective layer of ozone in the stratosphere. This ban, called the Montreal Protocol, came into effect in 1989.)

16 May 2023: The most significant event today in this story was the US Senate hearing with testimony from AI critic Gary Marcus and OpenAI CEO Sam Altman. See this highlights reel on MLST (Machine Learning Street Talk: 50 mins.) The entire 3 hour hearing is also on C-SPAN. I particularly favored Christina Montgomery's opening statement (minute 14). She is VP and Chief Privacy and Trust Officer at IBM. She emphasizes precision regulation: identify the precise risks (eg deep fakes), then regulate those. My guess is that the EU's characteristic approach of over-regulation and heavy fines may lead to the exit of some tech companies.

21 May 2023: Yann LeCun is the head of FAIR (Facebook AI Research), a professor at NYU, and one of the "godfathers" of machine learning (along with Geoff Hinton and Yoshua Bengio.) Yann also invented CNNs (convolutional neural nets), which cracked the problem of machine vision, as in the ImageNet Challenge. Here, in this wonderful one hour interview, Yann LeCun explains his positions on AI and AGI.

Here's what I like — his positions agree precisely with mine! 1) The upside of LLMs and AGI is vastly greater than the downside. LLMs will give everyone intelligent assistants to aid in their work, resulting in a huge increase in productivity. 2) To create human level AI, we need many further breakthroughs. Research on AGI needs to be unrestricted. 3) Much of what humans know and all that animals know is non-linguistic. It has nothing to do with language. Rather, it has to do with the way the world works physically (and psychologically). Knocking a plate off the dinner table makes it fall (and may make your parents angry.) 4) There are some risks to specific AI products, eg deep fakes. Those need to be addressed and regulated. AI will be an important part of the solution. 5) The notion that LLMs pose an existential risk to humanity is sci-fi nonsense.

25 May 2023: Here's the big AGI story for today: Nvidia stock explodes after 'guidance for the ages'. Nvidia's market value rose by > $200 billion in the biggest one-day rise in history. Read the many optimistic statements from analysts. All predict that Nvidia will be at the center of the LLM/AGI storm that will lead to rapid acceleration of parallel processing hardware in data centers.

22 July 2023: This story from Axios, White House gets AI firms to take safety pledge, is nicely reassuring — to me — about the slow rollout of restrictions on AI research and deployment. There seems to be an emphasis on watermarking AI images (that's good), understanding AI's limitations (beautifully vague), emphasizing use of AI to confront challenges of cancer prevention and climate change (excellent), and no timeline for anything (perfect.) Hopefully, this will be enough to keep the nervous nellies silenced.

Dario Amodei, founder and CEO of Anthropic (and father of Claude 2)

9 Aug 2023: This is a superb chat with Dario Amodei, the CEO of Anthropic. The interviewer, Dwarkesh Patel, asks just the right questions and hits the right issues. Examples: when will we get to AGI and will the current path (more data, bigger models) take us there? When will terrorists be able to use these models to create a bioterror weapon? What prevents a state actor, eg China, from stealing your models?

25 Sept 2023: The smartest LLM Chatbot (as of today) is Claude 2, created by Anthropic, headed by Dario Amodei. I strongly recommend that you sign up: it's free, it's easily accessible, and it's amazing! BTW, Amazon just invested $4 billion in Anthropic today!

How does it differ from ChatGPT? I asked Claude 2. Here's its answer.

There are a few key differences between myself and ChatGPT:

1. Training data - I was trained by Anthropic on a dataset focused on safe, helpful, honest dialog. ChatGPT was trained on a broader dataset scraped from the internet.

2. Capabilities - I'm designed to have natural conversations and admit when I don't know something. ChatGPT aims to provide plausible-sounding responses to a wider range of prompts.

3. Self-correction - I can rethink and correct previous responses if they seem flawed. ChatGPT does not have the capability to self-correct.

4. Safety - I was built with safety and ethics principles in mind to avoid harmful content. ChatGPT does not have similar safeguards.

5. Evolution - I expect to rapidly evolve and improve based on feedback from users like yourself! The capabilities ChatGPT demonstrates today represent a fixed snapshot in time.

6. Understanding - I aim for dialog that demonstrates true understanding and reasoning. ChatGPT is more focused on text generation and surface plausibility.

So in summary, while we are both AI assistants created by different companies, I strive for more human-like conversation and "common sense", while ChatGPT focuses more on wide knowledge and text generation. My goal is to have thoughtful, nuanced dialogues that help people.

I welcome substantive comments on all my articles. I will only print your comments if you grant your permission. You can post here anonymously, but to do so you should have a verifiable identity, eg a website, LinkedIn ID, or Facebook page, etc. Mail comments to bob AT bobblum DOT com (with the usual syntax.)