AI Overlord or Tool?

In February 2011, when IBM's Watson defeated all-time Jeopardy champions Ken Jennings and Brad Rutter, it joined a pantheon of iconic computer projects that have been milestones for AI. Here's a partial list.

1956 Arthur Samuel's Checkers Player

1971 Terry Winograd's SHRDLU: Language Understanding in Blocks World

1974 Harry Pople and Jack Myers's INTERNIST, an internal medicine diagnostician

1975 Ted Shortliffe's MYCIN, a high performing, rule-based infectious disease expert system

1997 IBM's Deep Blue, a chess player that beat world champion Garry Kasparov

2000 ASIMO, Honda's deservedly famous android

2005 Stanford CSD’s Stanley, winner of the DARPA Grand Challenge robotic car race in the Mojave Desert

This victory by IBM's research team led by computer scientist David Ferrucci was an important milestone for AI. Beating the best human players at Jeopardy was a difficult engineering challenge that required harnessing several areas of AI: natural language processing (NLP), knowledge representation and reasoning, and parallel processing. Being a world champion at Jeopardy requires great accuracy, a reliable estimate of confidence, and incredible speed.

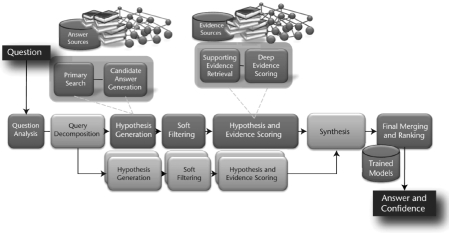

The best high-level article on Watson was written by Ferrucci's IBM team, and appeared in AI Magazine in 2010. They described the evolution of their DeepQA architecture from earlier QA systems including IBM's Piquant and NIST's Aquaint.

I also recommend KurzweilAI's Amara Angelica's interview with IBM's Eric Brown.

The best TV show on Watson was this PBS NOVA, Smartest Machine on Earth. These brief videos about Watson on IBM's website are also excellent. And, if you missed the Jeopardy shows, you'll want to see this clip of the champs in a preliminary bout.

Here's what everybody wants: a system that (unlike a search engine) gives you exactly the right answer as rapidly and accurately as possible. How many cells are there in the human body? What causes cancer? Why is the rate of autism increasing? How would Sotomayor vote if Roe v. Wade were challenged? Why does it get colder when you go up a mountain? What is the current status of string theory? Why did multicellularity take so long to evolve?

You can see why the problem is so difficult. The system must understand the question, decide what kind of answer would be appropriate, search the internet, and then display an accurate answer with the right supporting evidence. This is the holy grail that IBM was trying to tackle with DeepQA, and Jeopardy was an excellent motivating task.

Below, I distill some key points on Watson, DeepQA, and the Jeopardy Challenge.

Ambiguity in Jeopardy Questions: Consider this Jeopardy question:

Category: LINCOLN BLOGS

Clue: Secretary Chase just submitted this to me for the third time; guess what, pal? This time I'm accepting it.

Answer: his resignation.

This Jeopardy question is a marvel of ambiguity. The demonstrative pronoun this in submitted this is all that refers to the answer. Lots of things can be submitted: documents, reports, letters. And who is Secretary Chase? What do pal and blogs have to do with it? Who is the I in I'm accepting it, and what is being accepted? Even if Watson misidentifies the goal of an inquiry, it can still recover by keeping track of alternative hypotheses.

Memory and World of Discourse: Watson was not connected to the internet. Everything it used in the contest was stored in memory. Your home computer typically has around 4 billion bytes (4 GB) of main memory (DRAM). Watson used 15 trillion bytes (15 TB) of DRAM shared by its 2880 cores. It used 1 TB of DRAM to store the equivalent of about 1 million 200-page books including encyclopedias, thesauri, dictionaries, movie databases, the Bible, and many other reference works. (The old estimate that human experts know about 50,000 facts is ludicrously low. Ray Kurzweil’s estimate of 1.25 terabytes may be closer but is just a rough guess.)

Level of Expertise in Jeopardy Champions: The best human players, like contestants Ken Jennings and Brad Rutter, are stunningly accurate, consistent, and fast. They answer perhaps 70% of the questions, and when they answer, they're right about 90% of the time. Furthermore, they're blindingly fast, frequently buzzing in within 3 seconds after the clue appears visually and within a few milliseconds after Alex Trebek reads it aloud. (Test your own reaction times here or here.)

Multiple Constraint Satisfaction: The heart of Watson and DeepQA is being able to score and rank-order thousands of possible answers (candidates or hypotheses) rapidly and accurately. Doing this well means trading off speed against accuracy. The IBM team uses a multi-tiered approach, first using quick and dirty methods to generate hypotheses, and then using increasingly compute-intensive methods to hone the list.

Consider the constraints that a final answer might have to satisfy. It must be correct in person, place, time in history and year, accord with all the facts, and accord with the logical structure of the question (i.e., its grammar and semantics). It may even need to satisfy special constraints like word rhyming (e.g., soccer locker for the question “where Pele keeps his ball”) or spatial constraints (e.g., north of Beijing).

Multiple constraint satisfaction is a key part of human knowledge acquisition. We know when something’s not right. My favorite metaphor for this is fitting a jigsaw puzzle piece: it needs to fit on all sides.
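To make the tiered idea concrete, here is a toy sketch in Python. It is not IBM's code: the Candidate class, the keyword-overlap scorer, the two constraint checks, and the made-up supporting passages are all my own assumptions for illustration. A cheap first pass trims the candidate list, and only the survivors get the more careful constraint checks.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    answer: str
    passage: str   # toy supporting text (invented for this example)
    kind: str      # toy answer type, e.g. "thing" or "person"


# A constraint returns a score in [0, 1]: how well the candidate fits.
Constraint = Callable[[Candidate], float]


def keyword_overlap(clue: str, cand: Candidate) -> float:
    """Quick and dirty tier: fraction of clue words found in the passage."""
    clue_words = set(clue.lower().split())
    passage_words = set(cand.passage.lower().split())
    if not clue_words:
        return 0.0
    return len(clue_words & passage_words) / len(clue_words)


def rank_candidates(clue: str, candidates: List[Candidate],
                    constraints: List[Constraint], keep: int = 3) -> List[Candidate]:
    # Tier 1: cheap scoring over every candidate, keep only the top few.
    shortlist = sorted(candidates,
                       key=lambda c: keyword_overlap(clue, c),
                       reverse=True)[:keep]
    if not constraints:
        return shortlist

    # Tier 2: costlier constraint checks, applied to the shortlist only.
    def constraint_score(c: Candidate) -> float:
        return sum(check(c) for check in constraints) / len(constraints)

    return sorted(shortlist, key=constraint_score, reverse=True)


# Hypothetical constraints for the LINCOLN BLOGS clue.
def is_a_thing(c: Candidate) -> float:
    return 1.0 if c.kind == "thing" else 0.0   # "this" points at a thing


def mentions_third_time(c: Candidate) -> float:
    return 1.0 if "third time" in c.passage.lower() else 0.0


clue = "Secretary Chase just submitted this to me for the third time"
candidates = [
    Candidate("the Gettysburg Address",
              "Lincoln delivered the Gettysburg Address in 1863", "thing"),
    Candidate("Salmon P. Chase",
              "Salmon Chase was the Secretary of the Treasury", "person"),
    Candidate("a budget report",
              "Secretary Chase submitted a budget report to Congress", "thing"),
    Candidate("his resignation",
              "Chase submitted his resignation to Lincoln for the third time", "thing"),
]

ranked = rank_candidates(clue, candidates, [is_a_thing, mentions_third_time])
for cand in ranked:
    print(cand.answer)
```

The point of the split is cost: the overlap check is trivial and can run over thousands of candidates, while the constraint checks stand in for the expensive analysis that only a short list can afford.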

Reliability of Evidence and Confidence: Host Alex Trebek laughed when Watson made a small but precise bet of $947 when it was winning $30,000. As a rational game player, Watson had optimized the expected value of its bet. How confident was it of its answer? How much would it lose if it were wrong?

Bayesian probabilities are popular in AI, but DeepQA uses a variety of weighting schemes, Bayes among them, to assess its confidence in the accuracy of its answers. It must account for source unreliability and partial satisfaction of multiple constraints.
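Here is one simple way such a confidence estimate could be put together, sketched in Python. This is my own illustration, not DeepQA's actual scoring: the Evidence fields, the weights, the bias, and the choice of a logistic squashing function are all assumptions. Each piece of evidence is discounted by how much its source is trusted, and partially satisfied constraints contribute partial scores.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Evidence:
    name: str
    score: float        # how well the answer satisfies this check, in [0, 1]
    reliability: float  # how much the source is trusted, in [0, 1]
    weight: float       # how much this kind of evidence matters


def confidence(evidence: List[Evidence], bias: float = -2.0) -> float:
    """Weighted, reliability-discounted evidence squashed into (0, 1)."""
    total = bias + sum(e.weight * e.reliability * e.score for e in evidence)
    return 1.0 / (1.0 + math.exp(-total))


def should_buzz(conf: float, threshold: float = 0.5) -> bool:
    """Answer only when confidence clears a (hypothetical) threshold."""
    return conf >= threshold


# Same evidence scores, but drawn from trusted vs. dubious sources.
trusted = [
    Evidence("answer type matches clue", 1.0, 0.9, 2.0),
    Evidence("passage supports answer", 0.8, 0.7, 3.0),
]
dubious = [
    Evidence("answer type matches clue", 1.0, 0.2, 2.0),
    Evidence("passage supports answer", 0.8, 0.2, 3.0),
]

print(should_buzz(confidence(trusted)))
print(should_buzz(confidence(dubious)))
```

With identical evidence scores, the answer backed by unreliable sources falls below the buzz threshold, which is the behavior you want from a player that loses money on wrong answers.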

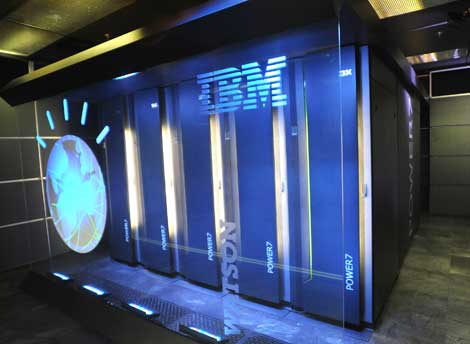

Hardware and Parallelism: The Watson team was using a single computer up to 2008, and answering a question took 2 hours. It now takes about 3 seconds. Here's the math: 90 servers with a total of 2880 cores (single processors working in parallel). 2 hours * 3600 seconds per hour = 7200 seconds. Divide by 2880 and, assuming the work splits perfectly across the cores, you get 2.5 seconds.
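The same back-of-the-envelope estimate in Python, as a minimal sketch. It assumes perfect linear speedup, which real systems rarely achieve, so treat the result as a lower bound on the time. The function name is mine, and the 2880-core count is the figure quoted earlier for Watson.

```python
def ideal_parallel_seconds(serial_hours: float, cores: int) -> float:
    """Best-case time if the work divides evenly across all cores."""
    serial_seconds = serial_hours * 3600  # hours to seconds
    return serial_seconds / cores


# 2 hours of single-computer work spread over 2880 cores.
print(ideal_parallel_seconds(2, 2880))  # 2.5
```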

Watson's ninety Power 750 servers give it a throughput of 80 teraflops: hefty but not outrageous considering that 20-petaflop supercomputers (250X faster) are under construction at Lawrence Livermore National Lab to come online in 2012.

Parallelism really helps. That's the brain's great strength. The human brain has over one million kilometers of wiring. That wiring (myelin insulation around axons = white matter) is most of the 3 pound weight of the brain. The corpus callosum (our big interhemispheric cable) alone contains two hundred million fibers. Your hundred billion neurons use only 20 watts of electricity and are liquid cooled. Compare that to the giant AC units required in the Watson machine room.

Common Sense: One of the longest running and best-funded AI projects of all time is CYC, an attempt by AI researcher Doug Lenat and his Cycorp team to capture all of common sense on a computer. Lenat estimates that CYC now contains 6 million facts. A fact might be that living things require water or that humans are mammals.

When Lenat was asked about Watson versus CYC, he said (I paraphrase): suppose there was a Jeopardy category goes uphill or downhill and the clue was spilled beer. Watson would utterly fail on this question. It would have no basis on which to answer. In contrast, CYC would know that beer is a liquid and that liquids usually flow downhill because of gravity.

The IBM team hand-encoded a lot of common sense knowledge into Watson, but that part of its database is tiny compared to what CYC has. (CYC has other crucial faults, but see Doug Lenat's defense of CYC in this video at Google HQ.)

Turing Test: The Turing Test is the most famous test for human-level machine intelligence in AI lore. It just means having a computer that fools people into thinking they're talking to another person. The Loebner Prize is awarded annually to the world's best efforts; however, current annual winners, though amusing, are not yet close to passing the Turing Test, which, I'm guessing, will happen within the next decade or two. (Do try out ELBOT, one of my favorite chatbots, to get a feel for the state of the art.) Interestingly, Watson is too smart! If you were conversing with it, you might know you were talking with a superhuman. To pass the Turing Test, it would need to be dumbed down.

Consciousness: Even if an extended version of Watson passed the Turing Test, it would NOT be conscious. Consciousness and intelligence are quite distinct. In multicellular organisms emotion and consciousness arose hundreds of millions of years before human intelligence. Computers have been highly intelligent for decades, going back to Art Samuel's checkers-playing programs in the 1950s. They have never been conscious.

Consciousness means perceiving the world in a highly detailed — gigabit per second — integrated fashion.

See that world around you? It's computed by your brain, locked inside your skull, and made to appear, virtual reality style, as the real world. It's such a flawless illusion that most people don't even get it after it's pointed out.

Mechanisms of consciousness are just now being uncovered by neuroscientists. Consciousness seems to require highly recurrent networks with precisely timed spiking neurons (and multiple resonating networks) with tight integration of short-term memory. The common von Neumann computer architecture is far less energy efficient than the brain's billion-fold parallelism.

On the other hand, robotics and computer vision researchers are making astounding progress by emulating the human visual system (Serre et al.). The robotic cars in the DARPA Grand Challenge and Microsoft's Kinect gaming system are great early applications of computer vision. I enthusiastically follow their efforts, but they're the first fledgling steps in a long journey toward the coalescence of perception, imagination, and intelligence underlying human cognition.

Humans went from living on the African savannah 200,000 years ago to walking on the Moon. AI researchers mistakenly thought animal sensory perception was the easy part and that human intelligence was the hard part. They had it backwards. Capturing the perceptual ability of a bird or a mammal has proven to be the more difficult task. After all, that capability took evolution hundreds of millions of years to achieve.

Intelligence Explosion: Watson's success will trigger academic and corporate competition that will result in a spate of intelligent systems. These QA systems will reside in the cloud on big server farms at IBM, Google, Apple, Facebook, Microsoft and others. However, they'll be available to all via the internet.

IBM discusses their plans for Watson's DeepQA for healthcare here.

The future is bright (for the machines). But take heart, fellow humans!

Between our ears, we’ve got the equivalent of a giant server farm that consumes only 20 watts and can be powered by mere coffee and donuts.

Copyleft 2011 BobBlum.com